By the end of this presentation, you will:

- Understand how your browser interacts with the internet

- Be able to gather data from the internet using 3 methods:

- HTTP requests + HTML parsing

- Selenium + HTML parsing

- API requests

- Understand the advantages and shortfalls of each method

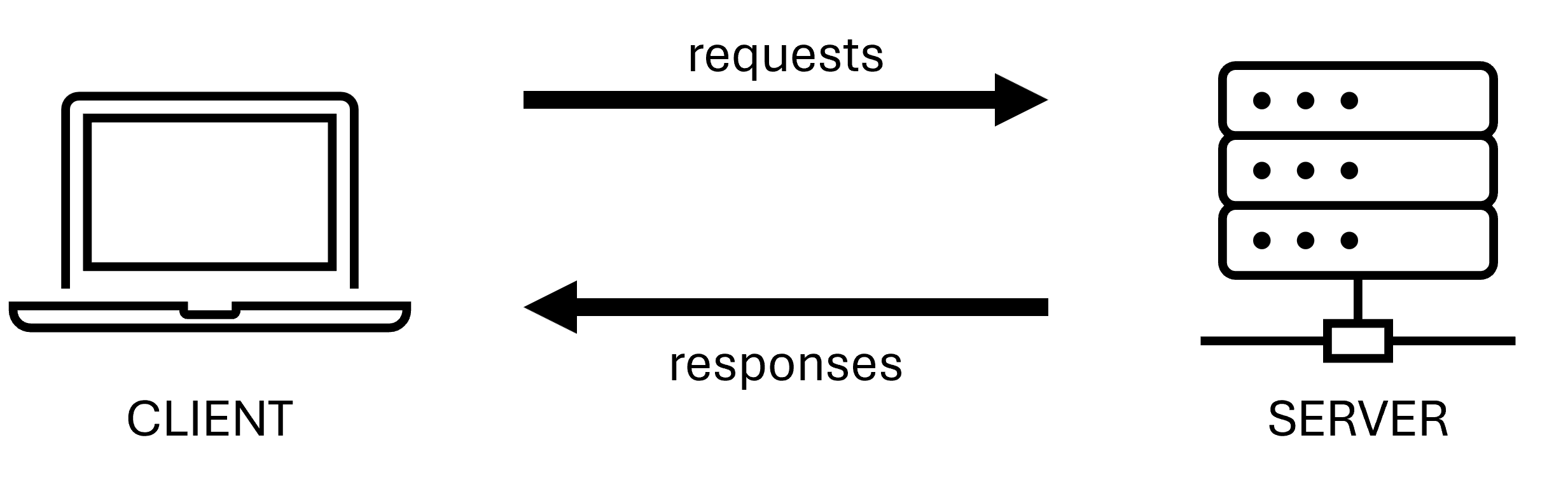

How does a website work?¶

A lot goes on behind the scenes when you view a website like https://www.brookings.edu/.

Understanding how your computer interacts with remote resources will help you become a more capable data collector.

Key terminology¶

Client¶

"Clients are the typical web user's internet-connected devices (for example, your computer connected to your Wi-Fi, or your phone connected to your mobile network) and web-accessing software available on those devices (usually a web browser like Firefox or Chrome)."[1]

Server¶

"Servers are computers that store webpages, sites, or apps. When a client device wants to access a webpage, a copy of the webpage is downloaded from the server onto the client machine to be displayed in the user's web browser."[2]

[1] Mozilla (2023). How the web works. Retrieved from https://developer.mozilla.org/en-US/docs/Learn/Getting_started_with_the_web/How_the_Web_works

[2] Ibid.

Clients send requests for information from servers. The servers send back responses.

- GET

- HEAD

- POST

- PUT

- DELETE

- CONNECT

- OPTIONS

- TRACE

- PATCH

[3] GeeksforGeeks (2023). Types of Internet Protocols. Retrieved from https://www.geeksforgeeks.org/types-of-internet-protocols/"

[4] Mozilla (2023). HTTP request methods. Retrieved from https://developer.mozilla.org/en-US/docs/Web/HTTP/Methods"

Luckily for us web scrapers, we do not need to memorize all nine types. Ninety nine percent of the time, we will only concern ourselves with GET and POST requests.

- "The GET method requests a representation of the specified resource. Requests using GET should only retrieve data."[5]

- "The POST method submits an entity to the specified resource, often causing a change in state or side effects on the server."[6]

Don't worry if you're confused. It's easy to tell which type you need to use. And today, we'll do a demo using both.

[5] Mozilla (2023). HTTP request methods. Retrieved from https://developer.mozilla.org/en-US/docs/Web/HTTP/Methods"

[6] Ibid.

Accessing a website¶

When you enter a URL (e.g., www.brookings.edu) into your browser, several things happen:

- DNS lookup

- Initial HTTP request

- Server response

- Parsing HTML + additional requests

- Assembling the page

Step 1: DNS Lookup¶

Your browser first translates the human-readable URL (e.g. http://brookings.edu/) into an IP address (e.g. 137.135.107.235) using a Domain Name System (DNS) lookup.[7]

Think of the DNS search like the phone book that links a name of a store to a street address. The street address, in this case, is an IP address which points to the server where the website is hosted. [8] [9]

[7] Fun fact: we talked about internet protocols in the previous section, but only described HTTP, which is most relevant to this application. DNS is another type of internet protocol.

[8] Mozilla (2023). How the web works. Retrieved from https://developer.mozilla.org/en-US/docs/Learn/Getting_started_with_the_web/How_the_Web_works

[9] Cloudflare. What is DNS? Retrieved from https://www.cloudflare.com/learning/dns/what-is-dns/

Step 2: HTTP Request¶

Now that your browser has the IP address of website, it sends an HTTP request to the server at this IP address. This request asks for the main HTML file of the website.

curl -X GET 'https://www.brookings.edu/' \

-H 'accept: text/html,application/xhtml+xml,application/xml;...' \

-H 'accept-language: en-US,en;q=0.9' \

-H 'cookie: _fbp=REDACTED; hubspotutk=REDACTED; ...' \

-H 'priority: u=0, i' \

-H 'referer: https://www.google.com/' \

-H 'sec-ch-ua: "Google Chrome";v="REDACTED", "Chromium";v="REDACTED", "Not.A/Brand";v="REDACTED"' \

-H 'sec-ch-ua-mobile: ?0' \

-H 'sec-ch-ua-platform: "Windows"' \

-H 'sec-fetch-dest: document' \

-H 'sec-fetch-mode: navigate' \

-H 'sec-fetch-site: cross-site' \

-H 'sec-fetch-user: ?1' \

-H 'upgrade-insecure-requests: 1' \

-H 'user-agent: Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/125.0.0.0 Safari/537.36'

Can you identify what type of request this is?

Step 3: Server Response¶

The server processes this request and sends back the requested HTML file. This file contains the basic structure of the webpage.

<!DOCTYPE html>

<html lang="en-US">

<head>

<meta charset="UTF-8">

<meta name="viewport" content="width=device-width, initial-scale=1.0, viewport-fit=cover">

<meta name='robots' content='index, follow, max-image-preview:large, max-snippet:-1, max-video-preview:-1' />

<link rel="alternate" href="https://www.brookings.edu/" hreflang="en" />

<link rel="alternate" href="https://www.brookings.edu/es/" hreflang="es" />

<link rel="alternate" href="https://www.brookings.edu/ar/" hreflang="ar" />

<link rel="alternate" href="https://www.brookings.edu/zh/" hreflang="zh" />

<link rel="alternate" href="https://www.brookings.edu/fr/" hreflang="fr" />

<link rel="alternate" href="https://www.brookings.edu/ko/" hreflang="ko" />

<link rel="alternate" href="https://www.brookings.edu/ru/" hreflang="ru" />

<!-- This site is optimized with the Yoast SEO plugin v22.0 - https://yoast.com/wordpress/plugins/seo/ -->

<title>Brookings - Quality. Independence. Impact.</title>

<meta name="description" content="The Brookings Institution is a nonprofit public policy organization based in Washington, DC. Our mission is to conduct in-depth research that leads to new ideas for solving problems facing society at the local, national and global level." />

<link rel="canonical" href="https://www.brookings.edu/" />

<meta property="og:locale" content="en_US" />

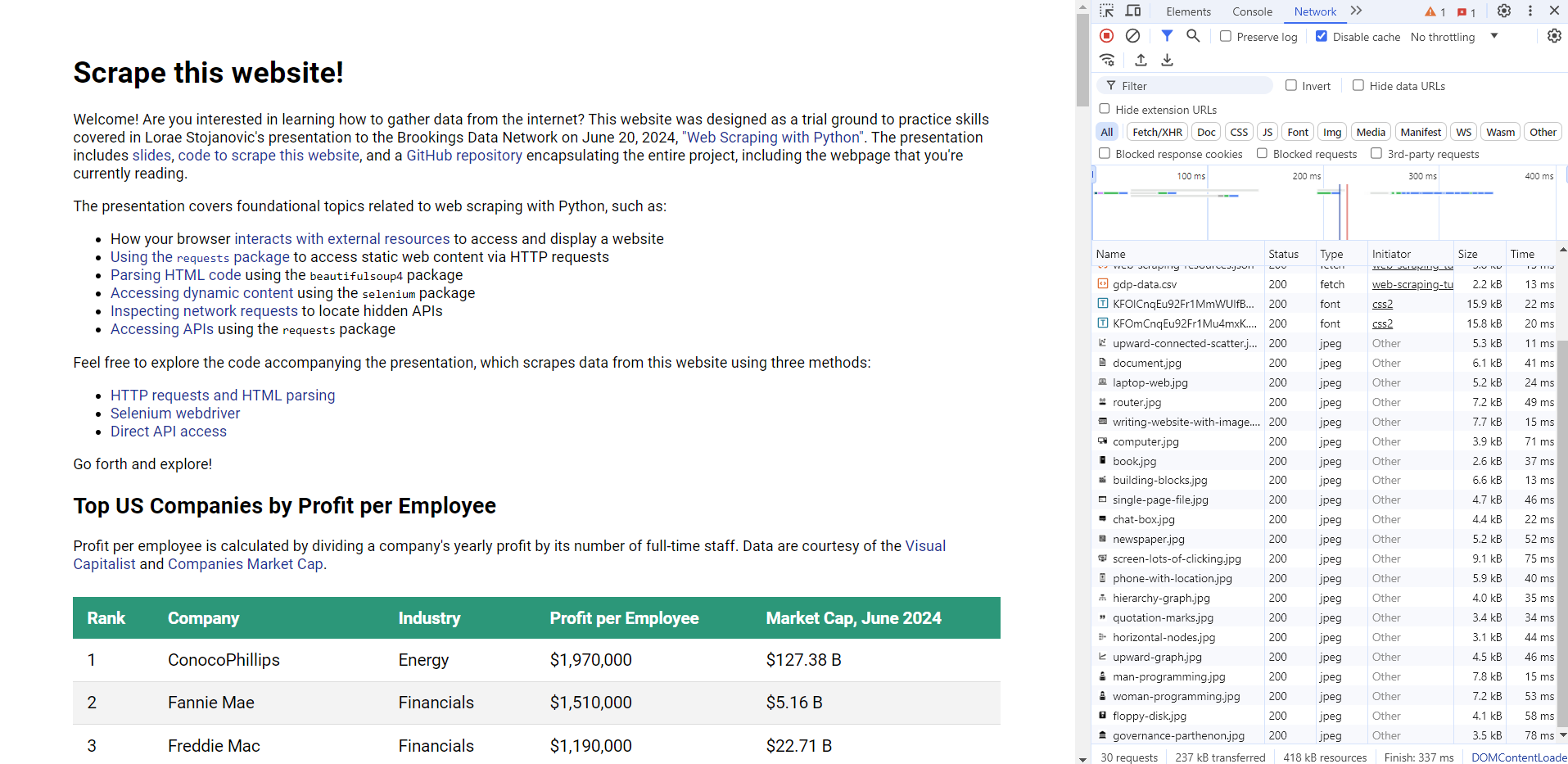

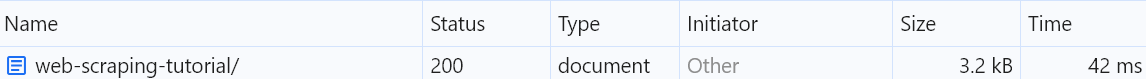

Step 4: Parsing HTML + additional requests¶

Your browser starts parsing the HTML file: reading its instructions to turn it into a user-friendly webpage.

Oftentimes, this code contains references to additional external resources it needs to display the webpage, such as:

- CSS (Cascading Style Sheets): To set default aesthetics like fonts, colors, and line spacing

- JavaScript code: To add interactivity and dynamic content

- Images and videos: To incorporate multimedia content

- API (Application Programming Interface) responses: To obtain data from servers, often in JSON format, to display on the webpage

As your browser encounters additional references to files in the HTML code, it makes HTTP requests to the server to retrieve them.

Step 5: Assembling the page¶

After downloading all the external resources needed to build the webpage, your browser will compile and execute any JavaScript code that it received.

With all the downloaded elements in place, the browser processes the HTML, the CSS style sheets, and combines it with other resources (such as downloaded fonts, photos, videos, and data downloaded from APIs) to paint the webpage to your screen.[10]

[10] Mozilla (2023). Populating the page: how browsers work. Retrieved from https://developer.mozilla.org/en-US/docs/Web/Performance/How_browsers_work"

Web scraping basics¶

There are several ways to collect data online. The strategy you choose must be catered to the website in question.

Types of web scraping¶

I like to categorize web data collection techniques by the point in the client-server interaction that they interact.

- DNS lookup

- Initial HTTP request

- Server response

- HTML scraping parses the main HTML file for the webpage to extract data.

- Parsing HTML + additional requests

- Technically not classified as "web scraping," APIs are a neat way to access data. They are usually requested at this point in the client-server interaction.

- Assembling the page

- Selenium behaves like a browser to view content that is otherwise not available in HTML files, because it is dynamically rendered using JavaScript code.

Sample code: Web requests & HTML parsing¶

Knowing how to make HTTP requests using the Python requests library and parsing HTML responses are two foundational skills in our web scraping toolkit that will help us tackle more complicated tasks later.

pip install requests beautifulsoup4

Now that we've confirmed installation, we import the needed libraries.

import requests

from bs4 import BeautifulSoup

[11] It's a best practice to use environments to control package dependencies. This project uses Poetry: https://python-poetry.org/

Time to get scraping. Recall the earlier GET request we saw? It had a lot of information in it, in the form of headers.

curl -X GET 'https://www.brookings.edu/' \

-H 'accept: text/html,application/xhtml+xml,application/xml;...' \

-H 'accept-language: en-US,en;q=0.9' \

-H 'cookie: _fbp=REDACTED; hubspotutk=REDACTED; ...' \

-H 'priority: u=0, i' \

-H 'referer: https://www.google.com/' \

-H 'sec-ch-ua: "Google Chrome";v="REDACTED", "Chromium";v="REDACTED", "Not.A/Brand";v="REDACTED"' \

-H 'sec-ch-ua-mobile: ?0' \

-H 'sec-ch-ua-platform: "Windows"' \

-H 'sec-fetch-dest: document' \

-H 'sec-fetch-mode: navigate' \

-H 'sec-fetch-site: cross-site' \

-H 'sec-fetch-user: ?1' \

-H 'upgrade-insecure-requests: 1' \

-H 'user-agent: Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/125.0.0.0 Safari/537.36'

As you can see, headers contain quite a bit of information. Unless otherwise required, I try to keep my headers on the lighter side.

These are the headers I usually provide in my HTTP requests. Sometimes, I get blocked - in which case, I change the user-agent string slightly.

Note that headers must be formatted as a dictionary.

my_headers = {

'User-Agent': (

'Mozilla/5.0 (Windows NT 10.0; Win64; x64) '

'AppleWebKit/537.36 (KHTML, like Gecko) '

'Chrome/122.0.6261.112 Safari/537.36'

),

'Accept': (

'text/html,application/xhtml+xml,application/xml;q=0.9,'

'image/avif,image/webp,image/apng,*/*;q=0.8,'

'application/signed-exchange;v=b3;q=0.7'

)

}

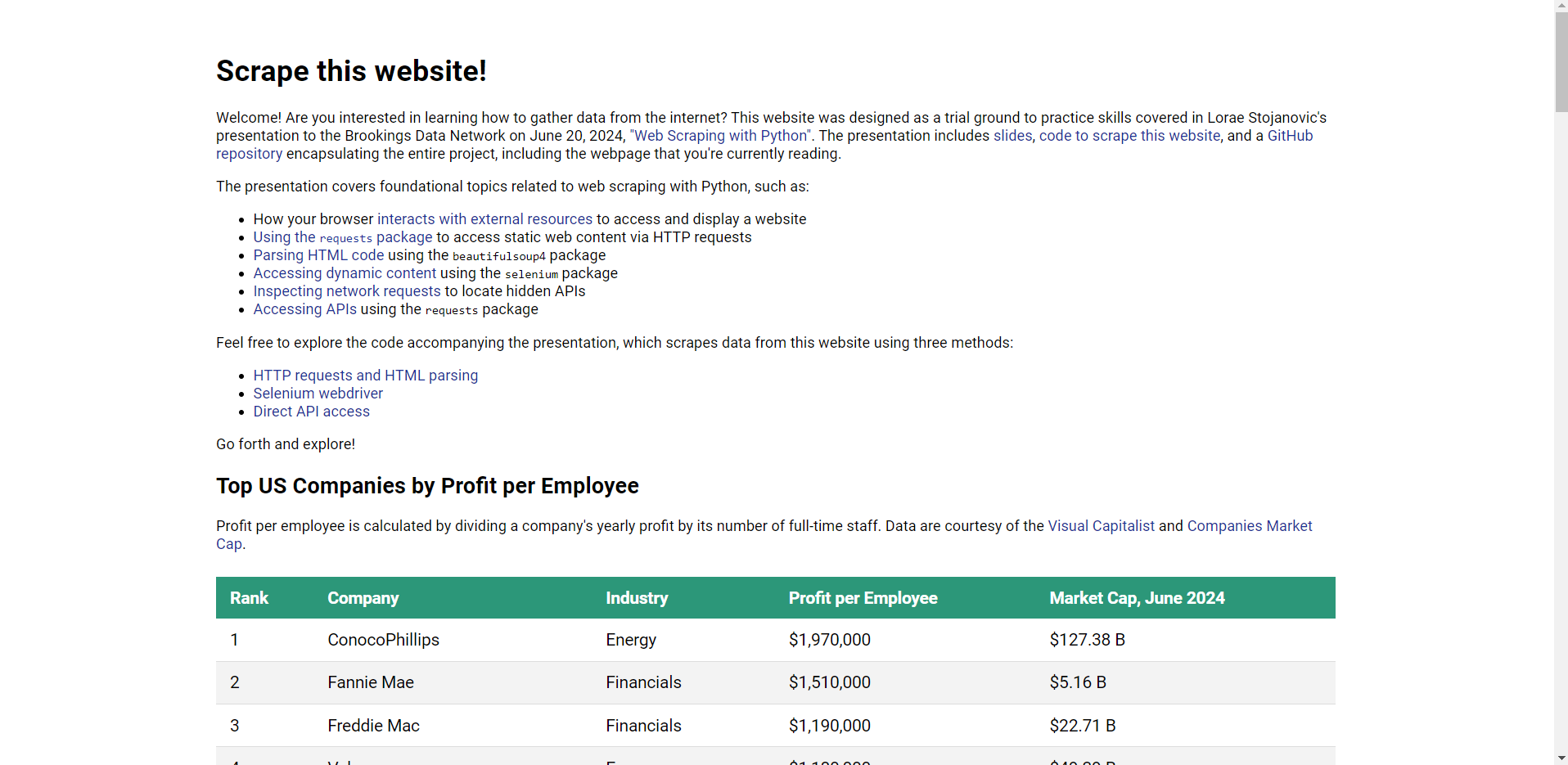

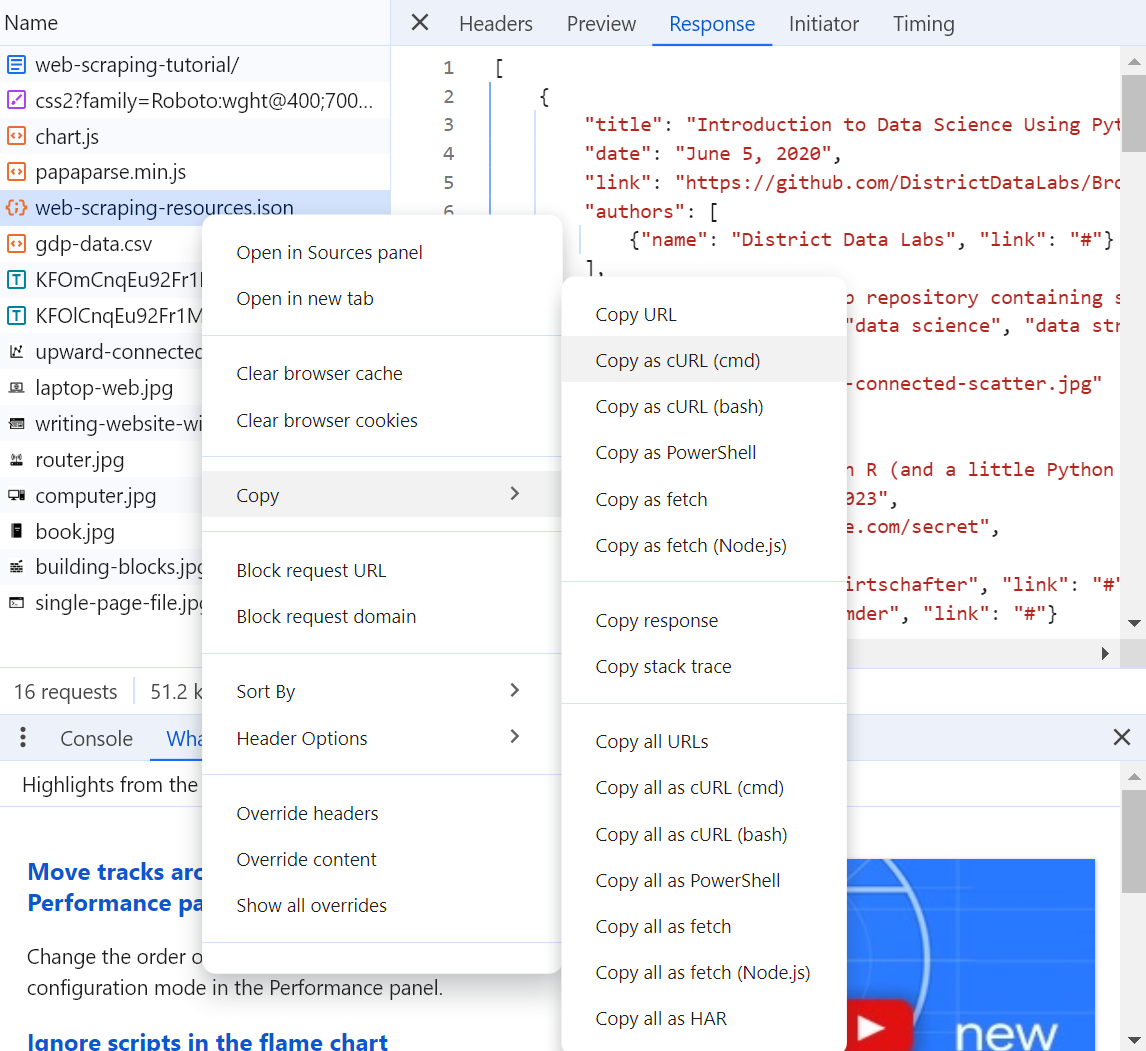

Today, we'll be scraping a website that I created to practice on! Our URL is: https://lorae.github.io/web-scraping-tutorial/

my_url = "https://lorae.github.io/web-scraping-tutorial/"

We're interested specifically in scraping the company names and profit per employee of the entries in the table in the webpage.

In order to do this, we have to first get the HTML file for the website.

In these next steps, we bundle our arguments together into an instance of the Request object from the requests library. We then initialize the session, prepare the request for sending, and save the response.

session_arguments = requests.Request(method='GET',

url=my_url,

headers=my_headers)

session = requests.Session()

prepared_request = session.prepare_request(session_arguments)

response: requests.Response = session.send(prepared_request)

Let's see what the response was. A response code of 200 indicates a successful response, with the server returning the required resource.

print(response.status_code)

200

Success!

More interestingly, let's look at the response content.

print(response.text)

<!DOCTYPE html>

<html lang="en">

<head>

<meta charset="UTF-8">

<meta name="viewport" content="width=device-width, initial-scale=1.0">

<title>Web Scraping Resources</title>

<link rel="stylesheet" href="web_content/css/styles.css">

<link href="https://fonts.googleapis.com/css2?family=Roboto:wght@400;700&display=swap" rel="stylesheet">

<script src="https://cdn.jsdelivr.net/npm/chart.js"></script>

<script src="https://cdnjs.cloudflare.com/ajax/libs/PapaParse/5.3.0/papaparse.min.js"></script>

</head>

<body>

<div class="container">

<h1>Scrape this website!</h1>

<p>Welcome! Are you interested in learning how to gather data from the internet? This website was designed as a trial ground to practice skills covered in Lorae Stojanovic's presentation to the Brookings Data Network on June 20, 2024, <a href="https://lorae.github.io/web-scraping-tutorial/advanced-web-scraping.slides.html">"Web Scraping with Python"</a>. The presentation includes <a href="https://lorae.github.io/web-scraping-tutorial/advanced-web-scraping.slides.html">slides</a>, <a href="https://lorae.github.io/web-scraping-tutorial/advanced-web-scraping.slides.html">code to scrape this website</a>, and a <a href="https://github.com/lorae/web-scraping-tutorial">GitHub repository</a> encapsulating the entire project, including the webpage that you're currently reading.</p>

<p>The presentation covers foundational topics related to web scraping with Python, such as:</p>

<ul>

<li>How your browser <a href="https://lorae.github.io/web-scraping-tutorial/advanced-web-scraping.slides.html#/2/5">interacts with external resources</a> to access and display a website</li>

<li><a href="https://lorae.github.io/web-scraping-tutorial/advanced-web-scraping.slides.html#/4/2">Using the <code>requests</code> package</a> to access static web content via HTTP requests</li>

<li><a href="https://lorae.github.io/web-scraping-tutorial/advanced-web-scraping.slides.html#/4/9">Parsing HTML code</a> using the <code>beautifulsoup4</code> package</li>

<li><a href="https://lorae.github.io/web-scraping-tutorial/advanced-web-scraping.slides.html#/5">Accessing dynamic content</a> using the <code>selenium</code> package</li>

<li><a href="https://lorae.github.io/web-scraping-tutorial/advanced-web-scraping.slides.html#/6/1">Inspecting network requests</a> to locate hidden APIs</li>

<li><a href="https://lorae.github.io/web-scraping-tutorial/advanced-web-scraping.slides.html#/6/10">Accessing APIs</a> using the <code>requests</code> package</li>

</ul>

<p>Feel free to explore the code accompanying the presentation, which scrapes data from this website using three methods:</p>

<ul>

<li><a href="#">HTTP requests and HTML parsing</a></li>

<li><a href="#">Selenium webdriver</a></li>

<li><a href="#">Direct API access</a></li>

</ul>

<p>Go forth and explore!</p>

<h2>Top US Companies by Profit per Employee</h2>

<p>Profit per employee is calculated by dividing a company's yearly profit by its number of full-time staff. Data are courtesy of the <a href="https://www.visualcapitalist.com/profit-per-employee-top-u-s-companies-ranking/">Visual Capitalist</a> and <a href="https://companiesmarketcap.com/">Companies Market Cap</a>.</p>

<table class="styled-table">

<thead>

<tr class="header-row">

<th class="header-cell rank">Rank</th>

<th class="header-cell company">Company</th>

<th class="header-cell industry">Industry</th>

<th class="header-cell profit-per-employee">Profit per Employee</th>

<th class="header-cell market-cap">Market Cap, June 2024</th>

</tr>

</thead>

<tbody>

<tr class="data-row">

<td class="data-cell rank">1</td>

<td class="data-cell company">ConocoPhillips</td>

<td class="data-cell industry">Energy</td>

<td class="data-cell profit-per-employee">$1,970,000</td>

<td class="data-cell market-cap">$127.38 B</td>

</tr>

<tr class="data-row">

<td class="data-cell rank">2</td>

<td class="data-cell company">Fannie Mae</td>

<td class="data-cell industry">Financials</td>

<td class="data-cell profit-per-employee">$1,510,000</td>

<td class="data-cell market-cap">$5.16 B</td>

</tr>

<tr class="data-row">

<td class="data-cell rank">3</td>

<td class="data-cell company">Freddie Mac</td>

<td class="data-cell industry">Financials</td>

<td class="data-cell profit-per-employee">$1,190,000</td>

<td class="data-cell market-cap">$22.71 B</td>

</tr>

<tr class="data-row">

<td class="data-cell rank">4</td>

<td class="data-cell company">Valero</td>

<td class="data-cell industry">Energy</td>

<td class="data-cell profit-per-employee">$1,180,000</td>

<td class="data-cell market-cap">$49.39 B</td>

</tr>

<tr class="data-row">

<td class="data-cell rank">5</td>

<td class="data-cell company">Occidental Petroleum</td>

<td class="data-cell industry">Energy</td>

<td class="data-cell profit-per-employee">$1,110,000</td>

<td class="data-cell market-cap">$54.31 B</td>

</tr>

<tr class="data-row">

<td class="data-cell rank">6</td>

<td class="data-cell company">Cheniere Energy</td>

<td class="data-cell industry">Energy</td>

<td class="data-cell profit-per-employee">$921,000</td>

<td class="data-cell market-cap">$36.88 B</td>

</tr>

<tr class="data-row">

<td class="data-cell rank">7</td>

<td class="data-cell company">ExxonMobil</td>

<td class="data-cell industry">Energy</td>

<td class="data-cell profit-per-employee">$899,000</td>

<td class="data-cell market-cap">$490.67 B</td>

</tr>

<tr class="data-row">

<td class="data-cell rank">8</td>

<td class="data-cell company">Phillips 66</td>

<td class="data-cell industry">Energy</td>

<td class="data-cell profit-per-employee">$848,000</td>

<td class="data-cell market-cap">$57.59 B</td>

</tr>

<tr class="data-row">

<td class="data-cell rank">9</td>

<td class="data-cell company">Marathon Petroleum</td>

<td class="data-cell industry">Energy</td>

<td class="data-cell profit-per-employee">$815,000</td>

<td class="data-cell market-cap">$60.75 B</td>

</tr>

<tr class="data-row">

<td class="data-cell rank">10</td>

<td class="data-cell company">Chevron</td>

<td class="data-cell industry">Energy</td>

<td class="data-cell profit-per-employee">$809,000</td>

<td class="data-cell market-cap">$280.40 B</td>

</tr>

<tr class="data-row">

<td class="data-cell rank">11</td>

<td class="data-cell company">PBF Energy</td>

<td class="data-cell industry">Energy</td>

<td class="data-cell profit-per-employee">$798,000</td>

<td class="data-cell market-cap">$5.10 B</td>

</tr>

<tr class="data-row">

<td class="data-cell rank">12</td>

<td class="data-cell company">Enterprise Products</td>

<td class="data-cell industry">Energy</td>

<td class="data-cell profit-per-employee">$752,000</td>

<td class="data-cell market-cap">$61.45 B</td>

</tr>

<tr class="data-row">

<td class="data-cell rank">13</td>

<td class="data-cell company">Apple</td>

<td class="data-cell industry">Tech</td>

<td class="data-cell profit-per-employee">$609,000</td>

<td class="data-cell market-cap">$3,245 B</td>

</tr>

<tr class="data-row">

<td class="data-cell rank">14</td>

<td class="data-cell company">Broadcom</td>

<td class="data-cell industry">Tech</td>

<td class="data-cell profit-per-employee">$575,000</td>

<td class="data-cell market-cap">$839.05 B</td>

</tr>

<tr class="data-row">

<td class="data-cell rank">15</td>

<td class="data-cell company">HF Sinclair</td>

<td class="data-cell industry">Energy</td>

<td class="data-cell profit-per-employee">$560,000</td>

<td class="data-cell market-cap">$10.01 B</td>

</tr>

<tr class="data-row">

<td class="data-cell rank">16</td>

<td class="data-cell company">D. R. Horton</td>

<td class="data-cell industry">Construction</td>

<td class="data-cell profit-per-employee">$433,000</td>

<td class="data-cell market-cap">$45.90 B</td>

</tr>

<tr class="data-row">

<td class="data-cell rank">17</td>

<td class="data-cell company">AIG</td>

<td class="data-cell industry">Financials</td>

<td class="data-cell profit-per-employee">$392,000</td>

<td class="data-cell market-cap">$49.19 B</td>

</tr>

<tr class="data-row">

<td class="data-cell rank">18</td>

<td class="data-cell company">Lennar</td>

<td class="data-cell industry">Construction</td>

<td class="data-cell profit-per-employee">$384,000</td>

<td class="data-cell market-cap">$40.93 B</td>

</tr>

<tr class="data-row">

<td class="data-cell rank">19</td>

<td class="data-cell company">Energy Transfer</td>

<td class="data-cell industry">Energy</td>

<td class="data-cell profit-per-employee">$379,000</td>

<td class="data-cell market-cap">$52.18 B</td>

</tr>

<tr class="data-row">

<td class="data-cell rank">20</td>

<td class="data-cell company">Pfizer</td>

<td class="data-cell industry">Healthcare</td>

<td class="data-cell profit-per-employee">$378,000</td>

<td class="data-cell market-cap">$155.32 B</td>

</tr>

<tr class="data-row">

<td class="data-cell rank">21</td>

<td class="data-cell company">Netflix</td>

<td class="data-cell industry">Tech</td>

<td class="data-cell profit-per-employee">$351,000</td>

<td class="data-cell market-cap">$295.45 B</td>

</tr>

<tr class="data-row">

<td class="data-cell rank">22</td>

<td class="data-cell company">Microsoft</td>

<td class="data-cell industry">Tech</td>

<td class="data-cell profit-per-employee">$329,000</td>

<td class="data-cell market-cap">$3,317 B</td>

</tr>

<tr class="data-row">

<td class="data-cell rank">23</td>

<td class="data-cell company">Alphabet</td>

<td class="data-cell industry">Tech</td>

<td class="data-cell profit-per-employee">$315,000</td>

<td class="data-cell market-cap">$2,170 B</td>

</tr>

<tr class="data-row">

<td class="data-cell rank">24</td>

<td class="data-cell company">Meta</td>

<td class="data-cell industry">Tech</td>

<td class="data-cell profit-per-employee">$268,000</td>

<td class="data-cell market-cap">$1,266 B</td>

</tr>

<tr class="data-row">

<td class="data-cell rank">25</td>

<td class="data-cell company">Qualcomm</td>

<td class="data-cell industry">Tech</td>

<td class="data-cell profit-per-employee">$254,000</td>

<td class="data-cell market-cap">$253.43 B</td>

</tr>

</tbody>

</table>

<h2>Learning resources</h2>

<div class="promo-grid__promos" id="resources-container">

<!-- Data will be populated here -->

</div>

</div>

<script>

let resourcesData = [];

async function fetchResources() {

const response = await fetch('web_content/data/web-scraping-resources.json');

resourcesData = await response.json();

displayResources(resourcesData);

}

function displayResources(data) {

const container = document.getElementById('resources-container');

container.innerHTML = '';

data.forEach(resource => {

const card = document.createElement('div');

card.className = 'digest-card';

const topSection = document.createElement('div');

topSection.className = 'digest-card__top';

const image = document.createElement('img');

image.className = 'digest-card__image';

image.src = resource.image;

image.alt = `${resource.title} image`;

topSection.appendChild(image);

const textContainer = document.createElement('div');

textContainer.className = 'digest-card__text';

const title = document.createElement('div');

title.className = 'digest-card__title';

title.innerHTML = `<a href="${resource.link}">${resource.title}</a>`;

textContainer.appendChild(title);

const date = document.createElement('div');

date.className = 'digest-card__date';

date.innerHTML = `<span class="digest-card__label">${resource.date}</span>`;

textContainer.appendChild(date);

const authors = document.createElement('div');

authors.className = 'digest-card__items';

authors.innerHTML = `<span class="digest-card__label">Author(s) - </span>${resource.authors.map(author => `<span><a href="${author.link}">${author.name}</a></span>`).join(', ')}`;

textContainer.appendChild(authors);

topSection.appendChild(textContainer);

card.appendChild(topSection);

const description = document.createElement('div');

description.className = 'digest-card__summary';

description.textContent = resource.description;

card.appendChild(description);

const keywords = document.createElement('div');

keywords.className = 'digest-card__keywords';

keywords.innerHTML = `<span class="digest-card__label">Keywords: </span>${resource.keywords.join(', ')}`;

card.appendChild(keywords);

container.appendChild(card);

});

}

fetchResources();

// Function to fetch and display GDP data

async function fetchGDPData() {

const response = await fetch('web_content/data/gdp-data.csv');

const data = await response.text();

const parsedData = Papa.parse(data, { header: true }).data;

const labels = parsedData.map(row => row.Date);

const gdpValues = parsedData.map(row => parseFloat(row.GDP));

const ctx = document.getElementById('gdpChart').getContext('2d');

new Chart(ctx, {

type: 'line',

data: {

labels: labels,

datasets: [{

label: 'US GDP',

data: gdpValues,

borderColor: 'rgba(75, 192, 192, 1)',

backgroundColor: 'rgba(75, 192, 192, 0.2)',

borderWidth: 1

}]

},

options: {

responsive: true,

scales: {

x: {

display: true,

title: {

display: true,

text: 'Year'

}

},

y: {

display: true,

title: {

display: true,

text: 'GDP (in billions)'

}

}

},

plugins: {

tooltip: {

enabled: true,

mode: 'nearest',

intersect: false,

callbacks: {

label: function(context) {

let label = context.dataset.label || '';

if (label) {

label += ': ';

}

if (context.parsed.y !== null) {

label += new Intl.NumberFormat('en-US', { style: 'currency', currency: 'USD' }).format(context.parsed.y);

}

return label;

}

}

}

}

}

});

}

fetchGDPData();

</script>

<div class = "container">

<h2>Interactive Graph</h2>

<p>The following graph contains United States nominal Gross Domestic Product data from Q1 1947 to Q1 2024. Data is courtesy of the Federal Reserve Bank of St. Louis "FRED" service. </p>

<div class="container">

<canvas id="gdpChart" width="400" height="200"></canvas>

</div>

</div>

</body>

</html>

HTML parsing with beautifulsoup4¶

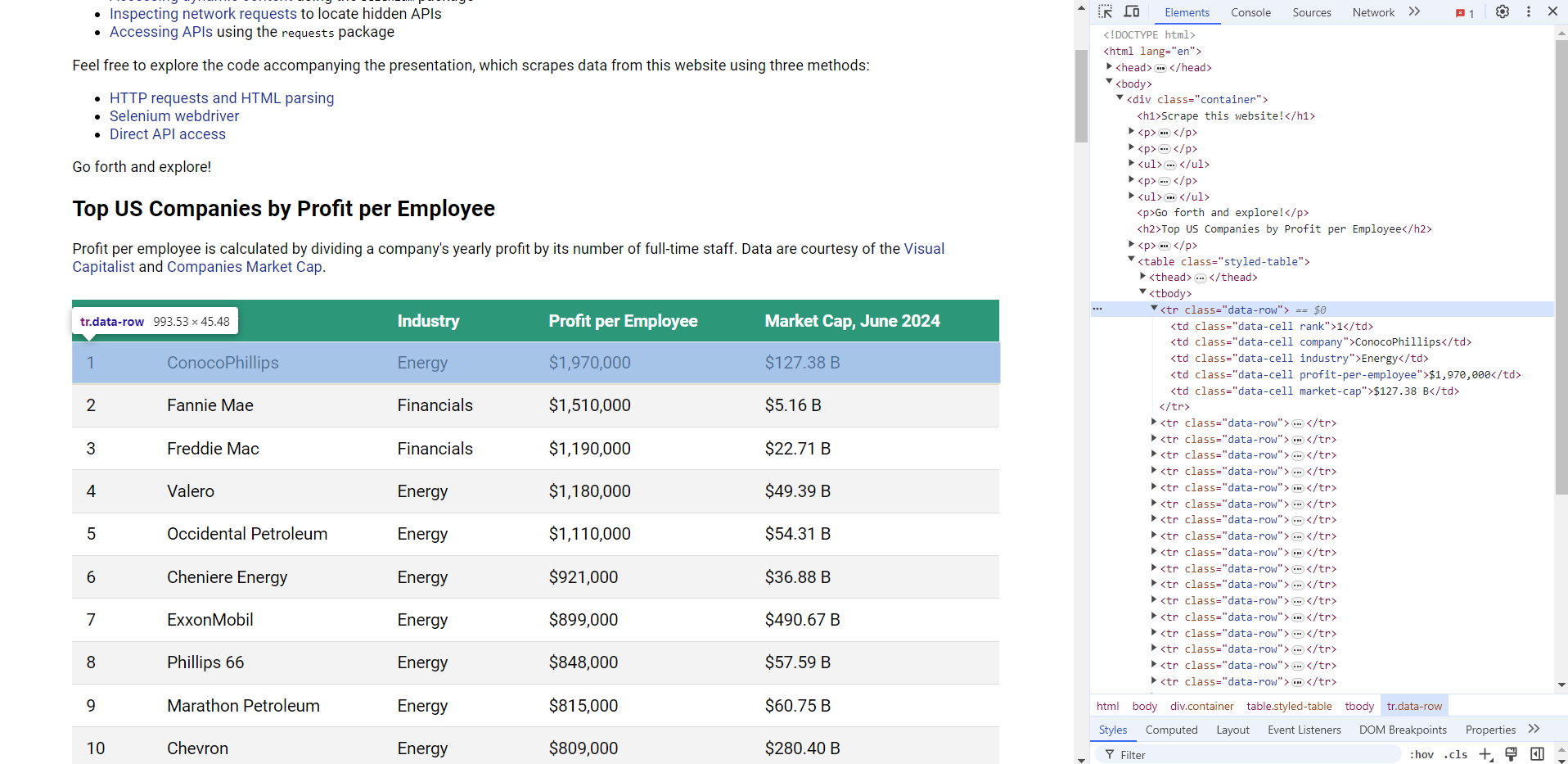

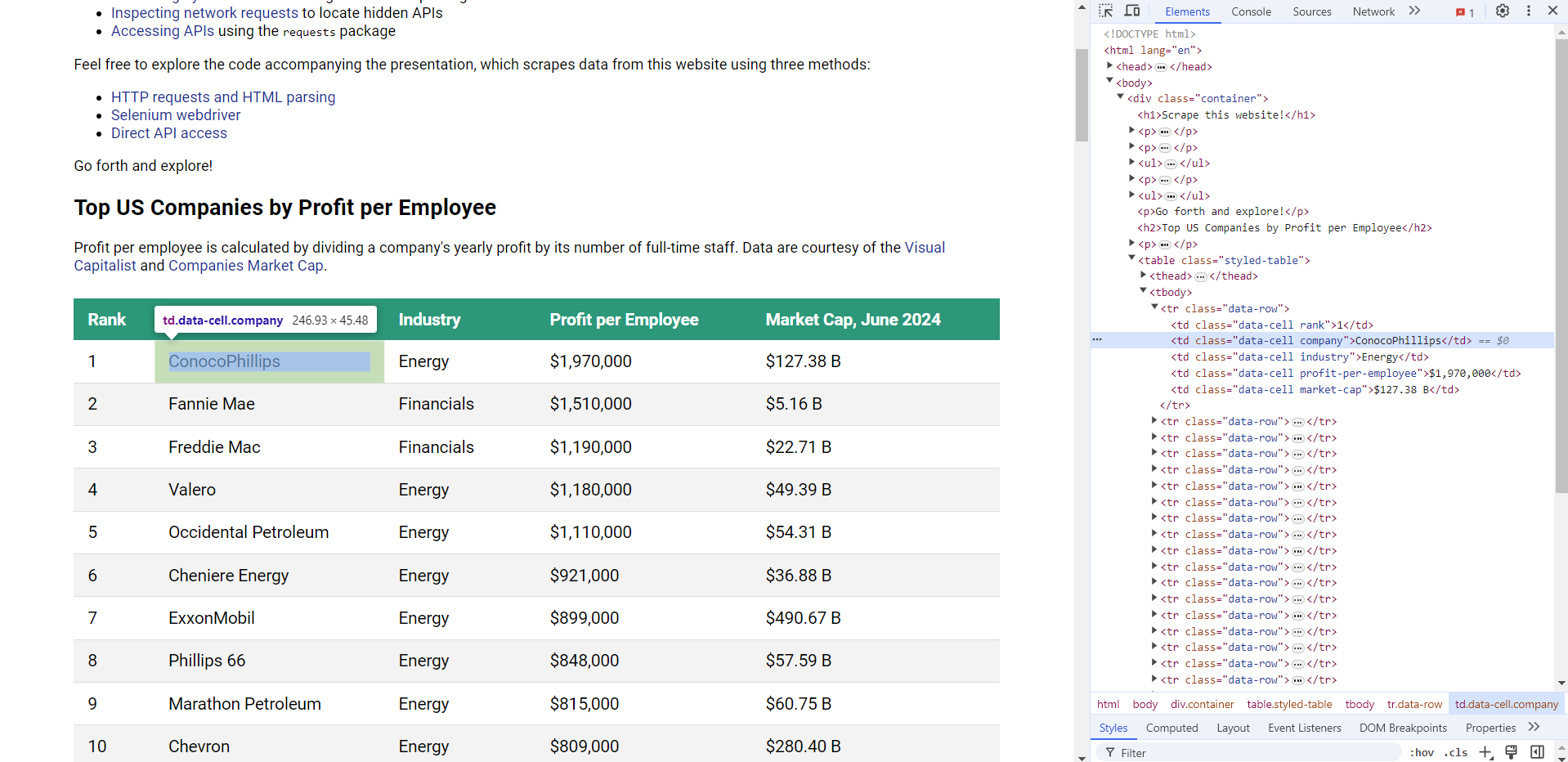

The response from the website may look like a mess, but don't worry. There's a package that makes picking data from the HTML code easy: It's called beautifulsoup4. We'll use it in conjunction with a helpful browser tool called "Inspect element".

(And, later in this presentation, we'll use "View page source" and "Network requests": Two other old favorites.)

Simply right click anywhere on the website with your browser open, and select the "Inspect element" option.

The data we want is contained in a <tr> element with class data-row. And after further inspection, we find that the company name is in a <td> child element with classes data-cell and company. The profit per employee is a sibling <td> element with classes data-cell and profit-per-employee.

Let's parse the HTML of the response from the web server.

soup = BeautifulSoup(response.content, 'html.parser')

print(soup)

<!DOCTYPE html>

<html lang="en">

<head>

<meta charset="utf-8"/>

<meta content="width=device-width, initial-scale=1.0" name="viewport"/>

<title>Web Scraping Resources</title>

<link href="web_content/css/styles.css" rel="stylesheet"/>

<link href="https://fonts.googleapis.com/css2?family=Roboto:wght@400;700&display=swap" rel="stylesheet"/>

<script src="https://cdn.jsdelivr.net/npm/chart.js"></script>

<script src="https://cdnjs.cloudflare.com/ajax/libs/PapaParse/5.3.0/papaparse.min.js"></script>

</head>

<body>

<div class="container">

<h1>Scrape this website!</h1>

<p>Welcome! Are you interested in learning how to gather data from the internet? This website was designed as a trial ground to practice skills covered in Lorae Stojanovic's presentation to the Brookings Data Network on June 20, 2024, <a href="https://lorae.github.io/web-scraping-tutorial/advanced-web-scraping.slides.html">"Web Scraping with Python"</a>. The presentation includes <a href="https://lorae.github.io/web-scraping-tutorial/advanced-web-scraping.slides.html">slides</a>, <a href="https://lorae.github.io/web-scraping-tutorial/advanced-web-scraping.slides.html">code to scrape this website</a>, and a <a href="https://github.com/lorae/web-scraping-tutorial">GitHub repository</a> encapsulating the entire project, including the webpage that you're currently reading.</p>

<p>The presentation covers foundational topics related to web scraping with Python, such as:</p>

<ul>

<li>How your browser <a href="https://lorae.github.io/web-scraping-tutorial/advanced-web-scraping.slides.html#/2/5">interacts with external resources</a> to access and display a website</li>

<li><a href="https://lorae.github.io/web-scraping-tutorial/advanced-web-scraping.slides.html#/4/2">Using the <code>requests</code> package</a> to access static web content via HTTP requests</li>

<li><a href="https://lorae.github.io/web-scraping-tutorial/advanced-web-scraping.slides.html#/4/9">Parsing HTML code</a> using the <code>beautifulsoup4</code> package</li>

<li><a href="https://lorae.github.io/web-scraping-tutorial/advanced-web-scraping.slides.html#/5">Accessing dynamic content</a> using the <code>selenium</code> package</li>

<li><a href="https://lorae.github.io/web-scraping-tutorial/advanced-web-scraping.slides.html#/6/1">Inspecting network requests</a> to locate hidden APIs</li>

<li><a href="https://lorae.github.io/web-scraping-tutorial/advanced-web-scraping.slides.html#/6/10">Accessing APIs</a> using the <code>requests</code> package</li>

</ul>

<p>Feel free to explore the code accompanying the presentation, which scrapes data from this website using three methods:</p>

<ul>

<li><a href="#">HTTP requests and HTML parsing</a></li>

<li><a href="#">Selenium webdriver</a></li>

<li><a href="#">Direct API access</a></li>

</ul>

<p>Go forth and explore!</p>

<h2>Top US Companies by Profit per Employee</h2>

<p>Profit per employee is calculated by dividing a company's yearly profit by its number of full-time staff. Data are courtesy of the <a href="https://www.visualcapitalist.com/profit-per-employee-top-u-s-companies-ranking/">Visual Capitalist</a> and <a href="https://companiesmarketcap.com/">Companies Market Cap</a>.</p>

<table class="styled-table">

<thead>

<tr class="header-row">

<th class="header-cell rank">Rank</th>

<th class="header-cell company">Company</th>

<th class="header-cell industry">Industry</th>

<th class="header-cell profit-per-employee">Profit per Employee</th>

<th class="header-cell market-cap">Market Cap, June 2024</th>

</tr>

</thead>

<tbody>

<tr class="data-row">

<td class="data-cell rank">1</td>

<td class="data-cell company">ConocoPhillips</td>

<td class="data-cell industry">Energy</td>

<td class="data-cell profit-per-employee">$1,970,000</td>

<td class="data-cell market-cap">$127.38 B</td>

</tr>

<tr class="data-row">

<td class="data-cell rank">2</td>

<td class="data-cell company">Fannie Mae</td>

<td class="data-cell industry">Financials</td>

<td class="data-cell profit-per-employee">$1,510,000</td>

<td class="data-cell market-cap">$5.16 B</td>

</tr>

<tr class="data-row">

<td class="data-cell rank">3</td>

<td class="data-cell company">Freddie Mac</td>

<td class="data-cell industry">Financials</td>

<td class="data-cell profit-per-employee">$1,190,000</td>

<td class="data-cell market-cap">$22.71 B</td>

</tr>

<tr class="data-row">

<td class="data-cell rank">4</td>

<td class="data-cell company">Valero</td>

<td class="data-cell industry">Energy</td>

<td class="data-cell profit-per-employee">$1,180,000</td>

<td class="data-cell market-cap">$49.39 B</td>

</tr>

<tr class="data-row">

<td class="data-cell rank">5</td>

<td class="data-cell company">Occidental Petroleum</td>

<td class="data-cell industry">Energy</td>

<td class="data-cell profit-per-employee">$1,110,000</td>

<td class="data-cell market-cap">$54.31 B</td>

</tr>

<tr class="data-row">

<td class="data-cell rank">6</td>

<td class="data-cell company">Cheniere Energy</td>

<td class="data-cell industry">Energy</td>

<td class="data-cell profit-per-employee">$921,000</td>

<td class="data-cell market-cap">$36.88 B</td>

</tr>

<tr class="data-row">

<td class="data-cell rank">7</td>

<td class="data-cell company">ExxonMobil</td>

<td class="data-cell industry">Energy</td>

<td class="data-cell profit-per-employee">$899,000</td>

<td class="data-cell market-cap">$490.67 B</td>

</tr>

<tr class="data-row">

<td class="data-cell rank">8</td>

<td class="data-cell company">Phillips 66</td>

<td class="data-cell industry">Energy</td>

<td class="data-cell profit-per-employee">$848,000</td>

<td class="data-cell market-cap">$57.59 B</td>

</tr>

<tr class="data-row">

<td class="data-cell rank">9</td>

<td class="data-cell company">Marathon Petroleum</td>

<td class="data-cell industry">Energy</td>

<td class="data-cell profit-per-employee">$815,000</td>

<td class="data-cell market-cap">$60.75 B</td>

</tr>

<tr class="data-row">

<td class="data-cell rank">10</td>

<td class="data-cell company">Chevron</td>

<td class="data-cell industry">Energy</td>

<td class="data-cell profit-per-employee">$809,000</td>

<td class="data-cell market-cap">$280.40 B</td>

</tr>

<tr class="data-row">

<td class="data-cell rank">11</td>

<td class="data-cell company">PBF Energy</td>

<td class="data-cell industry">Energy</td>

<td class="data-cell profit-per-employee">$798,000</td>

<td class="data-cell market-cap">$5.10 B</td>

</tr>

<tr class="data-row">

<td class="data-cell rank">12</td>

<td class="data-cell company">Enterprise Products</td>

<td class="data-cell industry">Energy</td>

<td class="data-cell profit-per-employee">$752,000</td>

<td class="data-cell market-cap">$61.45 B</td>

</tr>

<tr class="data-row">

<td class="data-cell rank">13</td>

<td class="data-cell company">Apple</td>

<td class="data-cell industry">Tech</td>

<td class="data-cell profit-per-employee">$609,000</td>

<td class="data-cell market-cap">$3,245 B</td>

</tr>

<tr class="data-row">

<td class="data-cell rank">14</td>

<td class="data-cell company">Broadcom</td>

<td class="data-cell industry">Tech</td>

<td class="data-cell profit-per-employee">$575,000</td>

<td class="data-cell market-cap">$839.05 B</td>

</tr>

<tr class="data-row">

<td class="data-cell rank">15</td>

<td class="data-cell company">HF Sinclair</td>

<td class="data-cell industry">Energy</td>

<td class="data-cell profit-per-employee">$560,000</td>

<td class="data-cell market-cap">$10.01 B</td>

</tr>

<tr class="data-row">

<td class="data-cell rank">16</td>

<td class="data-cell company">D. R. Horton</td>

<td class="data-cell industry">Construction</td>

<td class="data-cell profit-per-employee">$433,000</td>

<td class="data-cell market-cap">$45.90 B</td>

</tr>

<tr class="data-row">

<td class="data-cell rank">17</td>

<td class="data-cell company">AIG</td>

<td class="data-cell industry">Financials</td>

<td class="data-cell profit-per-employee">$392,000</td>

<td class="data-cell market-cap">$49.19 B</td>

</tr>

<tr class="data-row">

<td class="data-cell rank">18</td>

<td class="data-cell company">Lennar</td>

<td class="data-cell industry">Construction</td>

<td class="data-cell profit-per-employee">$384,000</td>

<td class="data-cell market-cap">$40.93 B</td>

</tr>

<tr class="data-row">

<td class="data-cell rank">19</td>

<td class="data-cell company">Energy Transfer</td>

<td class="data-cell industry">Energy</td>

<td class="data-cell profit-per-employee">$379,000</td>

<td class="data-cell market-cap">$52.18 B</td>

</tr>

<tr class="data-row">

<td class="data-cell rank">20</td>

<td class="data-cell company">Pfizer</td>

<td class="data-cell industry">Healthcare</td>

<td class="data-cell profit-per-employee">$378,000</td>

<td class="data-cell market-cap">$155.32 B</td>

</tr>

<tr class="data-row">

<td class="data-cell rank">21</td>

<td class="data-cell company">Netflix</td>

<td class="data-cell industry">Tech</td>

<td class="data-cell profit-per-employee">$351,000</td>

<td class="data-cell market-cap">$295.45 B</td>

</tr>

<tr class="data-row">

<td class="data-cell rank">22</td>

<td class="data-cell company">Microsoft</td>

<td class="data-cell industry">Tech</td>

<td class="data-cell profit-per-employee">$329,000</td>

<td class="data-cell market-cap">$3,317 B</td>

</tr>

<tr class="data-row">

<td class="data-cell rank">23</td>

<td class="data-cell company">Alphabet</td>

<td class="data-cell industry">Tech</td>

<td class="data-cell profit-per-employee">$315,000</td>

<td class="data-cell market-cap">$2,170 B</td>

</tr>

<tr class="data-row">

<td class="data-cell rank">24</td>

<td class="data-cell company">Meta</td>

<td class="data-cell industry">Tech</td>

<td class="data-cell profit-per-employee">$268,000</td>

<td class="data-cell market-cap">$1,266 B</td>

</tr>

<tr class="data-row">

<td class="data-cell rank">25</td>

<td class="data-cell company">Qualcomm</td>

<td class="data-cell industry">Tech</td>

<td class="data-cell profit-per-employee">$254,000</td>

<td class="data-cell market-cap">$253.43 B</td>

</tr>

</tbody>

</table>

<h2>Learning resources</h2>

<div class="promo-grid__promos" id="resources-container">

<!-- Data will be populated here -->

</div>

</div>

<script>

let resourcesData = [];

async function fetchResources() {

const response = await fetch('web_content/data/web-scraping-resources.json');

resourcesData = await response.json();

displayResources(resourcesData);

}

function displayResources(data) {

const container = document.getElementById('resources-container');

container.innerHTML = '';

data.forEach(resource => {

const card = document.createElement('div');

card.className = 'digest-card';

const topSection = document.createElement('div');

topSection.className = 'digest-card__top';

const image = document.createElement('img');

image.className = 'digest-card__image';

image.src = resource.image;

image.alt = `${resource.title} image`;

topSection.appendChild(image);

const textContainer = document.createElement('div');

textContainer.className = 'digest-card__text';

const title = document.createElement('div');

title.className = 'digest-card__title';

title.innerHTML = `<a href="${resource.link}">${resource.title}</a>`;

textContainer.appendChild(title);

const date = document.createElement('div');

date.className = 'digest-card__date';

date.innerHTML = `<span class="digest-card__label">${resource.date}</span>`;

textContainer.appendChild(date);

const authors = document.createElement('div');

authors.className = 'digest-card__items';

authors.innerHTML = `<span class="digest-card__label">Author(s) - </span>${resource.authors.map(author => `<span><a href="${author.link}">${author.name}</a></span>`).join(', ')}`;

textContainer.appendChild(authors);

topSection.appendChild(textContainer);

card.appendChild(topSection);

const description = document.createElement('div');

description.className = 'digest-card__summary';

description.textContent = resource.description;

card.appendChild(description);

const keywords = document.createElement('div');

keywords.className = 'digest-card__keywords';

keywords.innerHTML = `<span class="digest-card__label">Keywords: </span>${resource.keywords.join(', ')}`;

card.appendChild(keywords);

container.appendChild(card);

});

}

fetchResources();

// Function to fetch and display GDP data

async function fetchGDPData() {

const response = await fetch('web_content/data/gdp-data.csv');

const data = await response.text();

const parsedData = Papa.parse(data, { header: true }).data;

const labels = parsedData.map(row => row.Date);

const gdpValues = parsedData.map(row => parseFloat(row.GDP));

const ctx = document.getElementById('gdpChart').getContext('2d');

new Chart(ctx, {

type: 'line',

data: {

labels: labels,

datasets: [{

label: 'US GDP',

data: gdpValues,

borderColor: 'rgba(75, 192, 192, 1)',

backgroundColor: 'rgba(75, 192, 192, 0.2)',

borderWidth: 1

}]

},

options: {

responsive: true,

scales: {

x: {

display: true,

title: {

display: true,

text: 'Year'

}

},

y: {

display: true,

title: {

display: true,

text: 'GDP (in billions)'

}

}

},

plugins: {

tooltip: {

enabled: true,

mode: 'nearest',

intersect: false,

callbacks: {

label: function(context) {

let label = context.dataset.label || '';

if (label) {

label += ': ';

}

if (context.parsed.y !== null) {

label += new Intl.NumberFormat('en-US', { style: 'currency', currency: 'USD' }).format(context.parsed.y);

}

return label;

}

}

}

}

}

});

}

fetchGDPData();

</script>

<div class="container">

<h2>Interactive Graph</h2>

<p>The following graph contains United States nominal Gross Domestic Product data from Q1 1947 to Q1 2024. Data is courtesy of the Federal Reserve Bank of St. Louis "FRED" service. </p>

<div class="container">

<canvas height="200" id="gdpChart" width="400"></canvas>

</div>

</div>

</body>

</html>

Once it's a BeautifulSoup object, it's pretty easy to get the data you want. The key is selecting the right elements using the correct tags.

We'll use for loops for this.

# Select elements corresponding to table rows

elements = soup.select('tr.data-row')

# Initialize lists for data output

Companies = []

PPEs = []

for el in elements:

company = el.find('td', class_='company').text

ppe = el.find('td', class_='profit-per-employee').text

# Add data to lists

Companies.append(company)

PPEs.append(ppe)

print(Companies)

print(PPEs)

['ConocoPhillips', 'Fannie Mae', 'Freddie Mac', 'Valero', 'Occidental Petroleum', 'Cheniere Energy', 'ExxonMobil', 'Phillips 66', 'Marathon Petroleum', 'Chevron', 'PBF Energy', 'Enterprise Products', 'Apple', 'Broadcom', 'HF Sinclair', 'D. R. Horton', 'AIG', 'Lennar', 'Energy Transfer', 'Pfizer', 'Netflix', 'Microsoft', 'Alphabet', 'Meta', 'Qualcomm'] ['$1,970,000', '$1,510,000', '$1,190,000', '$1,180,000', '$1,110,000', '$921,000', '$899,000', '$848,000', '$815,000', '$809,000', '$798,000', '$752,000', '$609,000', '$575,000', '$560,000', '$433,000', '$392,000', '$384,000', '$379,000', '$378,000', '$351,000', '$329,000', '$315,000', '$268,000', '$254,000']

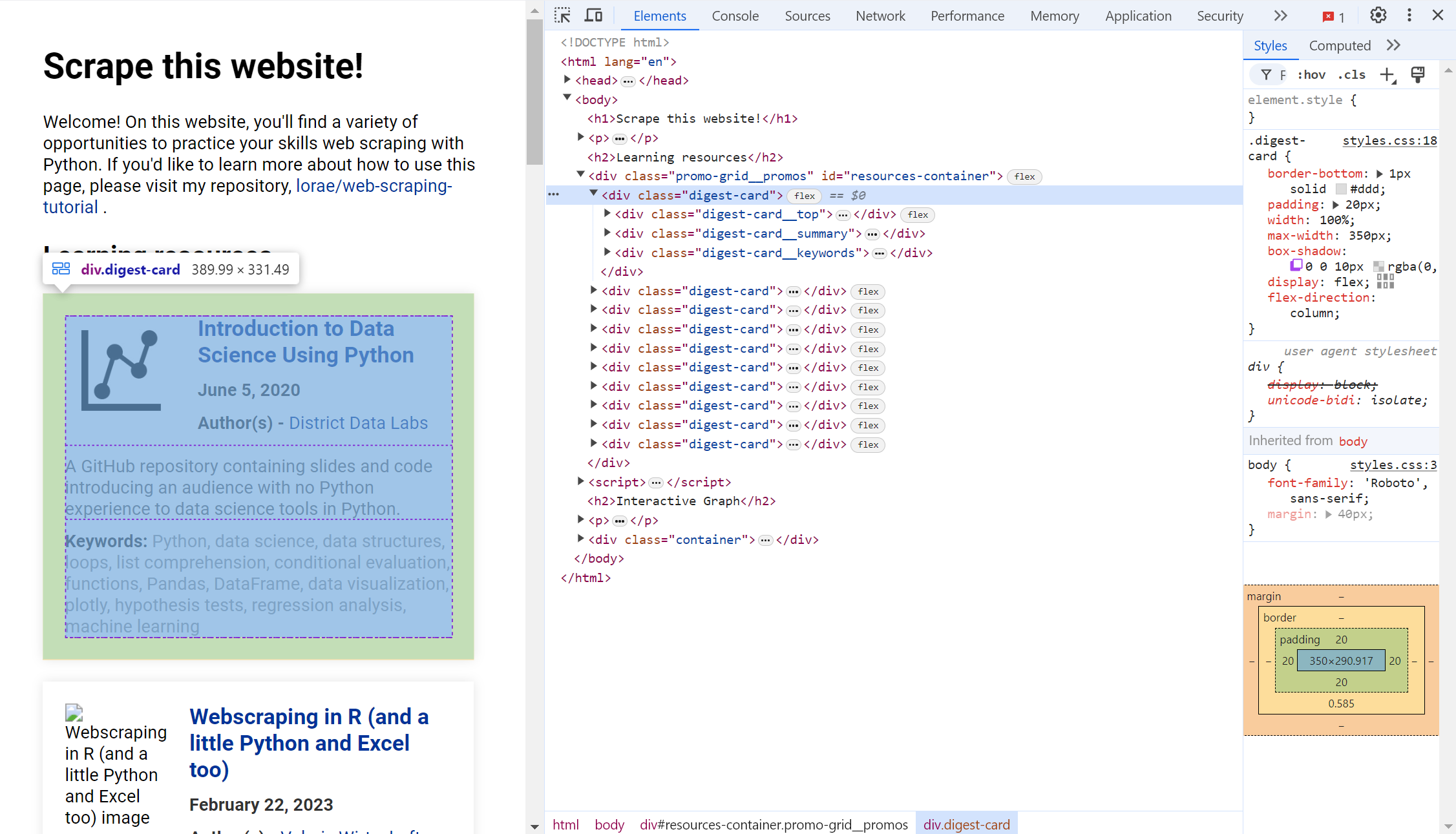

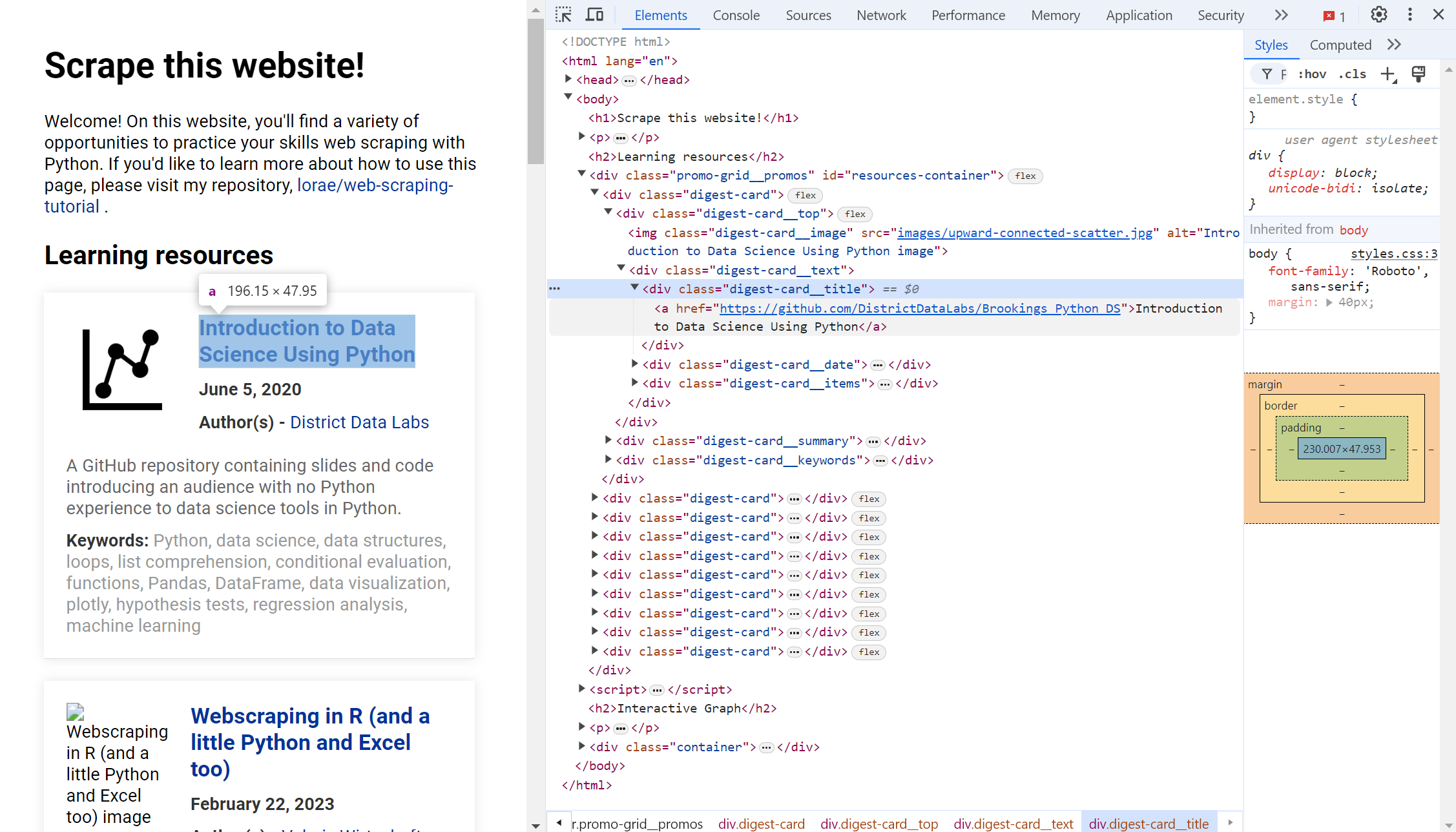

Wow, we're pros! Should we try another one? Let's get titles and links of learning resources listed on the website. First we inspect element to find the element and class:

Data on web scraping learning resources are continained in a <div> element with class digest-card. The title of the resource is in a child <div> element with class digest-card__title: more specifically, the text is stored in an <a> element, with the hyperlink stored in the href attribute.

# Scrape titles and links

elements = soup.select('div.digest-card__title a')

# Initialize lists

Titles = []

Links = []

for el in elements:

print(el)

# Obtain the link to the resource

link = el['href'] # 'href' is a HTML lingo for hyperlinks.

# Obtain the title of the resource

title = el.text

# Append the entries to each list

Titles.append(title)

Links.append(link)

# Print the results

print(Titles)

print(Links)

[] []

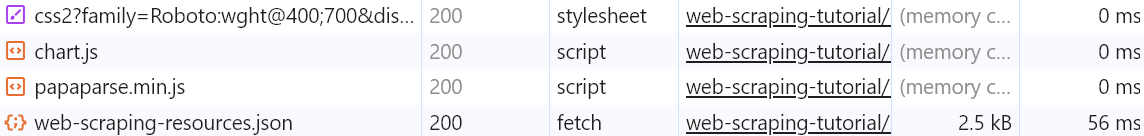

Why didn't this work?

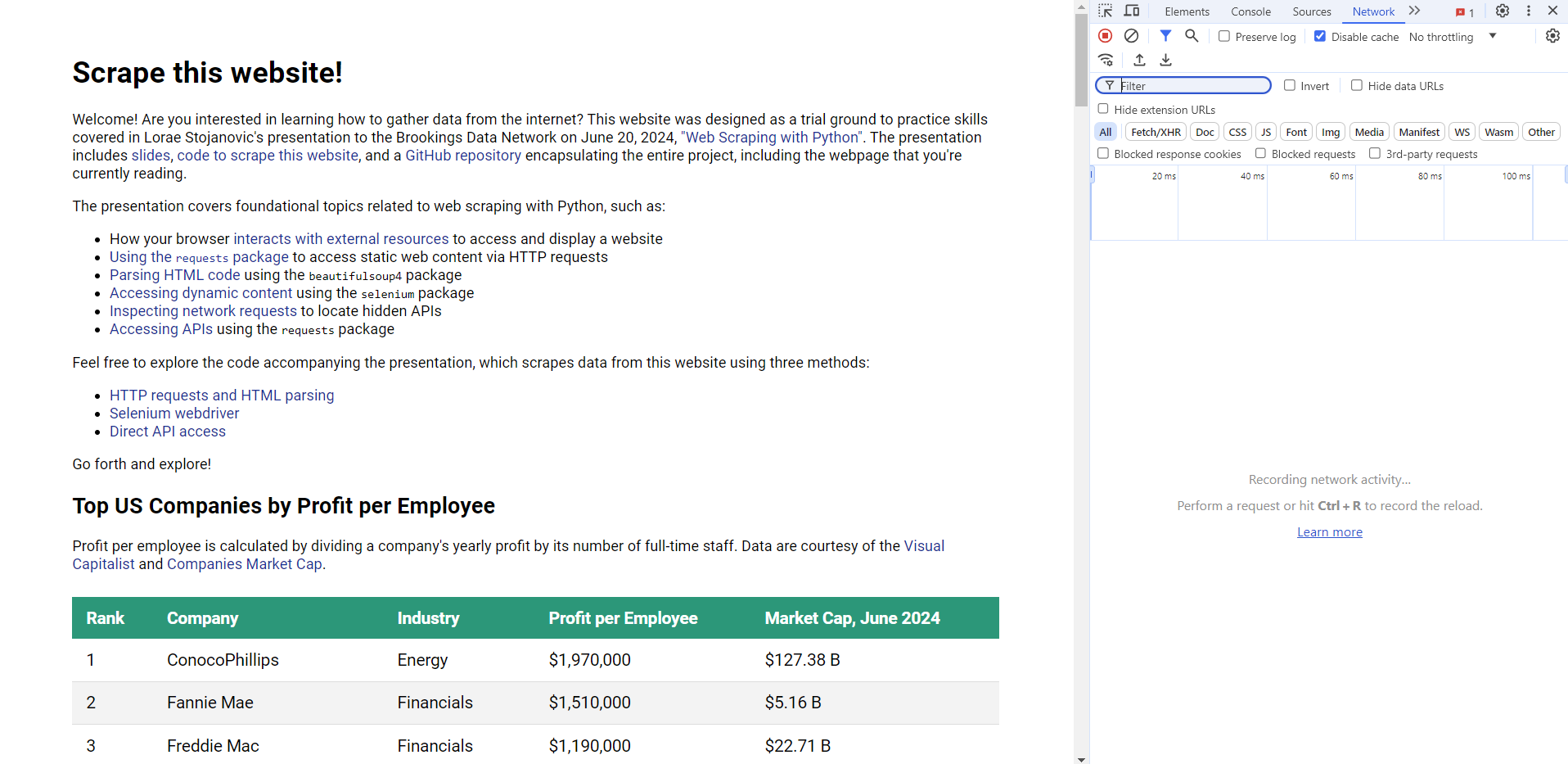

This part of the webpage is rendered using JavaScript! This is becoming an increasingly common occurence on today's web, which is why pure HTML scraping is becoming less and less feasible.

How do you know JavaScript is the culprit?

- Your code has no syntax errors yet doesn't pick up elements from the HTML code

- "View page source" shows no hard-coded elements

- "Network requests" reveal the JavaScript files used to populate the page

Sample code: selenium¶

selenium is a tool in Python (and many other programming languages) that allows users to access dynamic web content by automating web browser interactions.[12]

It simulates a real user browsing the web, which enables it to capture JavaScript-rendered content and other dynamic elements that one-off HTTP requests cannot access.

[12] Selenium documentation can be found here: https://www.selenium.dev/documentation/webdriver/getting_started/

But first, a note...¶

Selenium is just a tool to get an HTML file. Once you obtain the file, you parse it exactly the same way as we did in the previous section: using a tool of choice, like Beautiful Soup.

Let's start by importing the needed modules.

from selenium import webdriver

from selenium.webdriver.chrome.service import Service

from selenium.webdriver.common.by import By

from selenium.webdriver.chrome.options import Options

from webdriver_manager.chrome import ChromeDriverManager

from bs4 import BeautifulSoup

selenium can run on many browsers, like Chrome and Firefox. For simplicity, we will use Chrome today.

In order for the code to work, you must have Chrome installed on your computer.

# Set up Chrome options

chrome_options = Options()

chrome_options.add_argument("--headless")

If you don't turn on "headless" mode, your browser will pop up on your screen when you run the code.

# Set up Chrome driver

service = Service(ChromeDriverManager().install())

driver = webdriver.Chrome(service=service, options=chrome_options)

# Open the website

url = "https://lorae.github.io/web-scraping-tutorial/"

driver.get(url)

# Get the HTML content of the page

html_content = driver.page_source

print(html_content)

<html lang="en"><head>

<meta charset="UTF-8">

<meta name="viewport" content="width=device-width, initial-scale=1.0">

<title>Web Scraping Resources</title>

<link rel="stylesheet" href="web_content/css/styles.css">

<link href="https://fonts.googleapis.com/css2?family=Roboto:wght@400;700&display=swap" rel="stylesheet">

<script src="https://cdn.jsdelivr.net/npm/chart.js"></script>

<script src="https://cdnjs.cloudflare.com/ajax/libs/PapaParse/5.3.0/papaparse.min.js"></script>

</head>

<body>

<div class="container">

<h1>Scrape this website!</h1>

<p>Welcome! Are you interested in learning how to gather data from the internet? This website was designed as a trial ground to practice skills covered in Lorae Stojanovic's presentation to the Brookings Data Network on June 20, 2024, <a href="https://lorae.github.io/web-scraping-tutorial/advanced-web-scraping.slides.html">"Web Scraping with Python"</a>. The presentation includes <a href="https://lorae.github.io/web-scraping-tutorial/advanced-web-scraping.slides.html">slides</a>, <a href="https://lorae.github.io/web-scraping-tutorial/advanced-web-scraping.slides.html">code to scrape this website</a>, and a <a href="https://github.com/lorae/web-scraping-tutorial">GitHub repository</a> encapsulating the entire project, including the webpage that you're currently reading.</p>

<p>The presentation covers foundational topics related to web scraping with Python, such as:</p>

<ul>

<li>How your browser <a href="https://lorae.github.io/web-scraping-tutorial/advanced-web-scraping.slides.html#/2/5">interacts with external resources</a> to access and display a website</li>

<li><a href="https://lorae.github.io/web-scraping-tutorial/advanced-web-scraping.slides.html#/4/2">Using the <code>requests</code> package</a> to access static web content via HTTP requests</li>

<li><a href="https://lorae.github.io/web-scraping-tutorial/advanced-web-scraping.slides.html#/4/9">Parsing HTML code</a> using the <code>beautifulsoup4</code> package</li>

<li><a href="https://lorae.github.io/web-scraping-tutorial/advanced-web-scraping.slides.html#/5">Accessing dynamic content</a> using the <code>selenium</code> package</li>

<li><a href="https://lorae.github.io/web-scraping-tutorial/advanced-web-scraping.slides.html#/6/1">Inspecting network requests</a> to locate hidden APIs</li>

<li><a href="https://lorae.github.io/web-scraping-tutorial/advanced-web-scraping.slides.html#/6/10">Accessing APIs</a> using the <code>requests</code> package</li>

</ul>

<p>Feel free to explore the code accompanying the presentation, which scrapes data from this website using three methods:</p>

<ul>

<li><a href="#">HTTP requests and HTML parsing</a></li>

<li><a href="#">Selenium webdriver</a></li>

<li><a href="#">Direct API access</a></li>

</ul>

<p>Go forth and explore!</p>

<h2>Top US Companies by Profit per Employee</h2>

<p>Profit per employee is calculated by dividing a company's yearly profit by its number of full-time staff. Data are courtesy of the <a href="https://www.visualcapitalist.com/profit-per-employee-top-u-s-companies-ranking/">Visual Capitalist</a> and <a href="https://companiesmarketcap.com/">Companies Market Cap</a>.</p>

<table class="styled-table">

<thead>

<tr class="header-row">

<th class="header-cell rank">Rank</th>

<th class="header-cell company">Company</th>

<th class="header-cell industry">Industry</th>

<th class="header-cell profit-per-employee">Profit per Employee</th>

<th class="header-cell market-cap">Market Cap, June 2024</th>

</tr>

</thead>

<tbody>

<tr class="data-row">

<td class="data-cell rank">1</td>

<td class="data-cell company">ConocoPhillips</td>

<td class="data-cell industry">Energy</td>

<td class="data-cell profit-per-employee">$1,970,000</td>

<td class="data-cell market-cap">$127.38 B</td>

</tr>

<tr class="data-row">

<td class="data-cell rank">2</td>

<td class="data-cell company">Fannie Mae</td>

<td class="data-cell industry">Financials</td>

<td class="data-cell profit-per-employee">$1,510,000</td>

<td class="data-cell market-cap">$5.16 B</td>

</tr>

<tr class="data-row">

<td class="data-cell rank">3</td>

<td class="data-cell company">Freddie Mac</td>

<td class="data-cell industry">Financials</td>

<td class="data-cell profit-per-employee">$1,190,000</td>

<td class="data-cell market-cap">$22.71 B</td>

</tr>

<tr class="data-row">

<td class="data-cell rank">4</td>

<td class="data-cell company">Valero</td>

<td class="data-cell industry">Energy</td>

<td class="data-cell profit-per-employee">$1,180,000</td>

<td class="data-cell market-cap">$49.39 B</td>

</tr>

<tr class="data-row">

<td class="data-cell rank">5</td>

<td class="data-cell company">Occidental Petroleum</td>

<td class="data-cell industry">Energy</td>

<td class="data-cell profit-per-employee">$1,110,000</td>

<td class="data-cell market-cap">$54.31 B</td>

</tr>

<tr class="data-row">

<td class="data-cell rank">6</td>

<td class="data-cell company">Cheniere Energy</td>

<td class="data-cell industry">Energy</td>

<td class="data-cell profit-per-employee">$921,000</td>

<td class="data-cell market-cap">$36.88 B</td>

</tr>

<tr class="data-row">

<td class="data-cell rank">7</td>

<td class="data-cell company">ExxonMobil</td>

<td class="data-cell industry">Energy</td>

<td class="data-cell profit-per-employee">$899,000</td>

<td class="data-cell market-cap">$490.67 B</td>

</tr>

<tr class="data-row">

<td class="data-cell rank">8</td>

<td class="data-cell company">Phillips 66</td>

<td class="data-cell industry">Energy</td>

<td class="data-cell profit-per-employee">$848,000</td>

<td class="data-cell market-cap">$57.59 B</td>

</tr>

<tr class="data-row">

<td class="data-cell rank">9</td>

<td class="data-cell company">Marathon Petroleum</td>

<td class="data-cell industry">Energy</td>

<td class="data-cell profit-per-employee">$815,000</td>

<td class="data-cell market-cap">$60.75 B</td>

</tr>

<tr class="data-row">

<td class="data-cell rank">10</td>

<td class="data-cell company">Chevron</td>

<td class="data-cell industry">Energy</td>

<td class="data-cell profit-per-employee">$809,000</td>

<td class="data-cell market-cap">$280.40 B</td>

</tr>

<tr class="data-row">

<td class="data-cell rank">11</td>

<td class="data-cell company">PBF Energy</td>

<td class="data-cell industry">Energy</td>

<td class="data-cell profit-per-employee">$798,000</td>

<td class="data-cell market-cap">$5.10 B</td>

</tr>

<tr class="data-row">

<td class="data-cell rank">12</td>

<td class="data-cell company">Enterprise Products</td>

<td class="data-cell industry">Energy</td>

<td class="data-cell profit-per-employee">$752,000</td>

<td class="data-cell market-cap">$61.45 B</td>

</tr>

<tr class="data-row">

<td class="data-cell rank">13</td>

<td class="data-cell company">Apple</td>

<td class="data-cell industry">Tech</td>

<td class="data-cell profit-per-employee">$609,000</td>

<td class="data-cell market-cap">$3,245 B</td>

</tr>

<tr class="data-row">

<td class="data-cell rank">14</td>

<td class="data-cell company">Broadcom</td>

<td class="data-cell industry">Tech</td>

<td class="data-cell profit-per-employee">$575,000</td>

<td class="data-cell market-cap">$839.05 B</td>

</tr>

<tr class="data-row">

<td class="data-cell rank">15</td>

<td class="data-cell company">HF Sinclair</td>

<td class="data-cell industry">Energy</td>

<td class="data-cell profit-per-employee">$560,000</td>

<td class="data-cell market-cap">$10.01 B</td>

</tr>

<tr class="data-row">

<td class="data-cell rank">16</td>

<td class="data-cell company">D. R. Horton</td>

<td class="data-cell industry">Construction</td>

<td class="data-cell profit-per-employee">$433,000</td>

<td class="data-cell market-cap">$45.90 B</td>

</tr>

<tr class="data-row">

<td class="data-cell rank">17</td>

<td class="data-cell company">AIG</td>

<td class="data-cell industry">Financials</td>

<td class="data-cell profit-per-employee">$392,000</td>

<td class="data-cell market-cap">$49.19 B</td>

</tr>

<tr class="data-row">

<td class="data-cell rank">18</td>

<td class="data-cell company">Lennar</td>

<td class="data-cell industry">Construction</td>

<td class="data-cell profit-per-employee">$384,000</td>

<td class="data-cell market-cap">$40.93 B</td>

</tr>

<tr class="data-row">

<td class="data-cell rank">19</td>

<td class="data-cell company">Energy Transfer</td>

<td class="data-cell industry">Energy</td>

<td class="data-cell profit-per-employee">$379,000</td>

<td class="data-cell market-cap">$52.18 B</td>

</tr>

<tr class="data-row">

<td class="data-cell rank">20</td>

<td class="data-cell company">Pfizer</td>

<td class="data-cell industry">Healthcare</td>

<td class="data-cell profit-per-employee">$378,000</td>

<td class="data-cell market-cap">$155.32 B</td>

</tr>

<tr class="data-row">

<td class="data-cell rank">21</td>

<td class="data-cell company">Netflix</td>

<td class="data-cell industry">Tech</td>

<td class="data-cell profit-per-employee">$351,000</td>

<td class="data-cell market-cap">$295.45 B</td>

</tr>

<tr class="data-row">

<td class="data-cell rank">22</td>

<td class="data-cell company">Microsoft</td>

<td class="data-cell industry">Tech</td>

<td class="data-cell profit-per-employee">$329,000</td>

<td class="data-cell market-cap">$3,317 B</td>

</tr>

<tr class="data-row">

<td class="data-cell rank">23</td>

<td class="data-cell company">Alphabet</td>

<td class="data-cell industry">Tech</td>

<td class="data-cell profit-per-employee">$315,000</td>

<td class="data-cell market-cap">$2,170 B</td>

</tr>

<tr class="data-row">

<td class="data-cell rank">24</td>

<td class="data-cell company">Meta</td>

<td class="data-cell industry">Tech</td>

<td class="data-cell profit-per-employee">$268,000</td>

<td class="data-cell market-cap">$1,266 B</td>

</tr>

<tr class="data-row">

<td class="data-cell rank">25</td>

<td class="data-cell company">Qualcomm</td>

<td class="data-cell industry">Tech</td>

<td class="data-cell profit-per-employee">$254,000</td>

<td class="data-cell market-cap">$253.43 B</td>

</tr>

</tbody>

</table>

<h2>Learning resources</h2>